Unlocking AI: Where It Actually Helps in Distributed Systems

Auto-documentation, debugging, and the hard-won lessons behind the hype

👋 Hi, this is Thomas, with a new issue of “Beyond Code: System Design and More”, where I geek out on all things system design, software architecture, distributed systems and… well, more.

QUOTE OF THE WEEK:

“In my lifetime, I’ve seen two demonstrations of technology that struck me as revolutionary: the GUI and ChatGPT” - (paraphrased) Bill Gates

AI is everywhere right now. Depending on who you ask, it’s either going to automate all of software engineering next quarter... or it’s all hype.

If you’ve actually used AI tools, you know the truth is somewhere in between, especially when you’re working with modern, distributed systems.

As a founder and engineer who has worked with distributed systems for over two decades, and who has been studying neural nets since the early 2000s, I’ve seen this swing between hype and dismissal before.

AI does bring something different to the table. The question is where it truly helps, and where the hype gets ahead of reality.

In this post, I want to focus on two pain points that have followed me across every role, documentation and debugging, and on how I’ve approached them using a combination of real-time visibility, system-aware tooling, and, yes, AI.

Why Docs and Debugging Still Hurt

We’ve all been there. You need to understand a system, and what you get is a grainy whiteboard photo titled “Final_architecture_v2,” last updated a couple of quarters ago.

Documentation is often treated as a one-off task. Maybe a sprint item, maybe a technical writer’s job, maybe something we’ll “get to later.” But for distributed systems, that’s a guaranteed path to stale, incomplete, and sometimes outright wrong information.

And debugging? That’s where you realize just how much you don’t know about the system. You hop between logs, traces, wiki pages, and Slack threads trying to piece together what happened.

These aren’t just annoying chores: they’re slow, expensive bottlenecks. And they get worse as your system scales.

Where Current AI Solutions Fit…

AI can help when the problem is structured, repetitive, and well-scoped. It excels at summarizing incident timelines, parsing massive log files, filling in documentation templates, identifying anomalous patterns, and generating boilerplate code or test cases.

It’s like an enthusiastic intern: great when it knows what to do, the instructions are precise, and the task isn’t ambiguous.

In the context of distributed systems, AI can reduce cognitive load by surfacing insights quickly, provided it’s fed the right signals. The key is that it must be grounded in accurate, real-time system data and used with human oversight.

… And Where They Don’t

Even when grounded in code or documentation, AI has real blind spots, especially in complex systems. Here are three major limitations we’ve run into firsthand:

1. It’s not thorough.

AI can describe what the system does, but rarely why it was built that way.

Good documentation, and good debugging, requires capturing the human context:

Why you chose one architecture over another

What trade-offs you knowingly accepted

Which shortcuts are unintentional technical debt, and which are intentional bets

None of that is in the code. None of it can be inferred from telemetry. And AI can’t hallucinate its way to that insight.

2. It’s not trustworthy.

Even well-tuned models can’t verify their own output.

Even if you avoid outright hallucinations, the quality of the output is still directly tied to the quality of the input. Feed a model stale or incomplete data and you get confident-sounding, wrong answers.

And here’s what most of us are dealing with in the real world:

Legacy systems with spaghetti code

Siloed teams duplicating functionality

Missing or inconsistent documentation

Even if your code is pristine, can you confidently say you have a single, accurate source of truth for your system architecture? Most orgs can’t.

3. It’s not current.

Let’s say you get good output today. Tomorrow, someone merges a PR and suddenly everything changes.

That elegant AI-generated architecture diagram? Now it’s stale. That nicely written API spec? Already out of sync with production.

To be truly useful, AI needs to be plugged into live system data and updated automatically, not just run as a one-time task.
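To make “updated automatically” a little more concrete, here is a minimal sketch of one small piece of such a loop: comparing the service dependencies a document claims against the ones observed in live traffic, so drift gets flagged on every merge or deploy instead of during an incident. The `Edge` pairs, the `find_drift` helper, and the service names are all hypothetical; in a real setup the observed edges would come from your tracing or telemetry backend.

```python
from dataclasses import dataclass

# Hypothetical representation: a dependency is a (caller, callee) pair of service names.
Edge = tuple[str, str]


@dataclass
class DriftReport:
    undocumented: set[Edge]  # seen in live traffic, missing from the docs
    stale: set[Edge]         # in the docs, but not seen in live traffic


def find_drift(documented: set[Edge], observed: set[Edge]) -> DriftReport:
    """Compare documented dependencies with those observed in telemetry."""
    return DriftReport(
        undocumented=observed - documented,
        stale=documented - observed,
    )


if __name__ == "__main__":
    documented = {("web", "orders"), ("orders", "payments")}
    observed = {("web", "orders"), ("orders", "payments-v2"), ("orders", "inventory")}
    report = find_drift(documented, observed)
    print(sorted(report.undocumented))  # [('orders', 'inventory'), ('orders', 'payments-v2')]
    print(sorted(report.stale))         # [('orders', 'payments')]
```

An edge missing from the observed set may simply be rarely exercised during the sampling window, so a “stale” entry is a prompt for a human to check, not proof that the dependency is gone.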

What Actually Works: A System-Aware Approach

To tackle stale documentation and time-consuming debugging, I didn’t start with AI. I started with real-time system visibility built on structured telemetry data. The live system became our source of truth: we have live dependency mapping and a real-time overview of the entire system architecture.
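One way to picture live dependency mapping: distributed traces already record which span called which, so service-to-service edges can be derived directly from parent/child span links. The sketch below assumes a simplified, hypothetical `Span` record, loosely modeled on the trace ID / span ID / parent span ID fields that tracing systems such as OpenTelemetry carry. It illustrates the idea only and is not the tooling described in this post.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    # Minimal, hypothetical span shape; real tracing systems carry many more fields.
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    service: str


def dependency_map(spans: list[Span]) -> dict[str, set[str]]:
    """Derive caller -> callee service edges from parent/child span links."""
    by_id = {(s.trace_id, s.span_id): s for s in spans}
    deps: dict[str, set[str]] = defaultdict(set)
    for span in spans:
        if span.parent_id is None:
            continue  # root span: no caller inside the system
        parent = by_id.get((span.trace_id, span.parent_id))
        if parent is None:
            continue  # parent not captured (e.g. sampled out)
        if parent.service != span.service:
            deps[parent.service].add(span.service)
    return dict(deps)


if __name__ == "__main__":
    spans = [
        Span("t1", "1", None, "web"),
        Span("t1", "2", "1", "orders"),
        Span("t1", "3", "2", "orders"),  # internal span within the same service
        Span("t1", "4", "3", "payments"),
    ]
    print(dependency_map(spans))  # {'web': {'orders'}, 'orders': {'payments'}}
```

Because the map is recomputed from whatever traffic is flowing, it stays current without anyone redrawing a diagram; the trade-off is that it only knows about paths that were actually exercised and sampled.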

On top of this live picture of the system, we layered the “seldom-documented context”: using notebooks and system design reviews, we document design rationale, API integration flows, trade-offs, and edge cases.

Next, we added deep session replays, so we could connect frontend behavior to backend traces, logs, and metrics across multiple services. Debugging then becomes about understanding what actually happened, end to end.
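The core of connecting frontend behavior to backend signals is a shared correlation key. A minimal sketch, assuming the browser propagates a trace ID with each request (for example via the W3C `traceparent` header) and that both session events and backend log lines record it. The `Event` shape and `session_timeline` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    ts_ms: int      # timestamp in milliseconds
    source: str     # "frontend" or a backend service name
    trace_id: str   # trace ID propagated from the browser request (assumed)
    message: str


def session_timeline(session_events: list[Event], backend_events: list[Event]) -> list[Event]:
    """Merge a user session's frontend events with backend events sharing its trace IDs."""
    trace_ids = {e.trace_id for e in session_events}
    related = [e for e in backend_events if e.trace_id in trace_ids]
    # One chronological, end-to-end view of what happened during the session.
    return sorted(session_events + related, key=lambda e: e.ts_ms)


if __name__ == "__main__":
    session = [
        Event(100, "frontend", "t1", "click: Checkout"),
        Event(900, "frontend", "t1", "error banner shown"),
    ]
    backend = [
        Event(150, "orders", "t1", "POST /orders"),
        Event(400, "payments", "t1", "timeout after 250 ms"),
        Event(420, "orders", "t2", "unrelated request"),
    ]
    for e in session_timeline(session, backend):
        print(e.ts_ms, e.source, e.message)
```

Filtering by trace ID before sorting keeps unrelated traffic (the `t2` request above) out of the timeline, which is what turns a pile of logs into a story of one user’s failed checkout.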

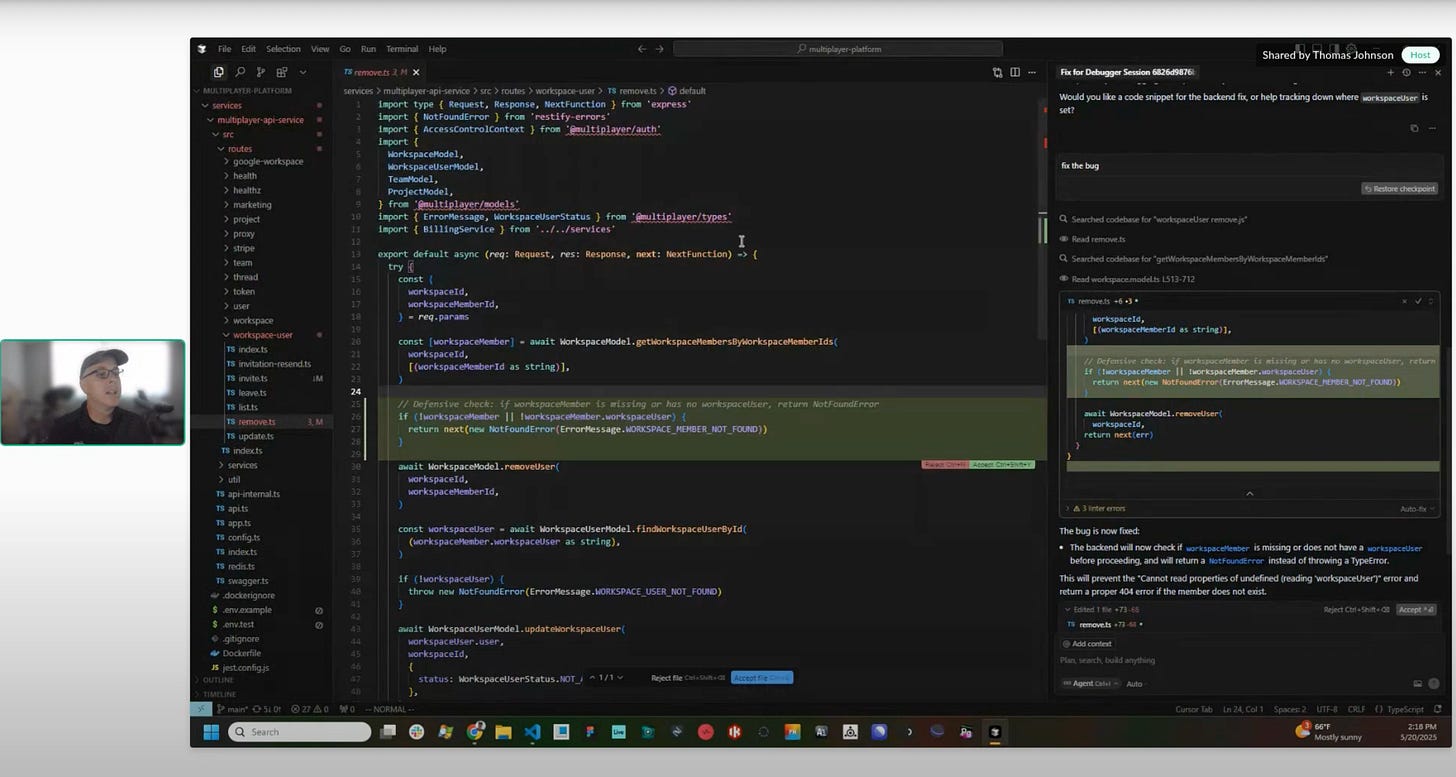

Only once we had the data and the context did we introduce AI: it helps us summarize, navigate, and answer questions about the system and the bugs we identify. At that point AI becomes genuinely useful, because it’s grounded in the actual state of our software.
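The general pattern here, often called grounding or retrieval-augmented prompting, is to hand the model the current system context and ask it to answer only from that. A minimal sketch, assuming hypothetical inputs (a dependency map, design notes, an incident timeline) and a hypothetical `build_grounded_prompt` helper; the actual model call is left out because it depends on your provider’s SDK.

```python
def build_grounded_prompt(
    question: str,
    dependencies: dict[str, set[str]],
    design_notes: dict[str, str],
    timeline: list[str],
) -> str:
    """Assemble a prompt that restricts the model to live system context."""
    lines = [
        "Answer using ONLY the system context below. "
        "If the context is insufficient, say so.",
        "",
        "## Live service dependencies",
    ]
    for caller in sorted(dependencies):
        lines.append(f"- {caller} -> {', '.join(sorted(dependencies[caller]))}")
    lines += ["", "## Design notes"]
    for topic, note in sorted(design_notes.items()):
        lines.append(f"- {topic}: {note}")
    lines += ["", "## Incident timeline"]
    lines += [f"- {entry}" for entry in timeline]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_grounded_prompt(
        question="Why did checkout fail for this session?",
        dependencies={"web": {"orders"}, "orders": {"payments", "inventory"}},
        design_notes={"payments": "synchronous call; 250 ms timeout is intentional"},
        timeline=["100 ms click: Checkout", "400 ms payments timeout"],
    )
    print(prompt)
```

The design choice is that the human-written context (the “why”) and the live data travel together, so the model has something accurate to summarize and an explicit instruction to admit when it doesn’t.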

The Takeaway

AI isn’t a magic wand. But it can augment your team—dramatically—if you combine it with real visibility, shared context, and system-aware tooling.

If you’re building or managing distributed systems, my recommendation is this:

Don’t start with AI. Start with real-time visibility and system-aware tools.

Use AI to amplify what your team is already doing, not replace the hard thinking.

Treat documentation and debugging as continuous, integrated workflows, not isolated events.

That’s the only way to scale understanding and maintain velocity as systems grow more complex.

I demoed how we use AI to fix bugs here, if you want a peek:

📚 Interesting Articles & Resources

Technology as a tool, not a solution: strategic integration for business success - Andre Ripla

This article emphasizes that technology should be viewed as a tool to achieve business objectives, not as a standalone solution. It warns against adopting new technologies without a clear strategic plan, highlighting risks such as wasted resources, employee resistance, and security vulnerabilities. Ripla advocates for thoughtful implementation and alignment of technology with broader business goals to drive success.

Duolingo’s Gerald Ratner Moment? - Rob Bowley

Rob Bowley draws parallels between Duolingo’s recent “AI-first” strategy announcement and the infamous misstep by Gerald Ratner, who once publicly disparaged his own products (“People say, ‘How can you sell this for such a low price?’ I say, ‘Because it’s total crap.’”). Duolingo faced backlash after announcing plans to replace contractors with AI and to make AI proficiency a key performance metric. The move led to negative customer reactions, social media criticism, and a perceived decline in product quality. Bowley underscores the importance of aligning technological advancements with customer expectations and maintaining trust.

The Law of Unintended Consequences: Shakespeare, Cobra Breeding, and a Tower in Pisa - Shane Parrish

This article explores how actions, especially in complex systems, can lead to unforeseen outcomes. It categorizes unintended consequences into unexpected benefits, unexpected drawbacks, and perverse results. The piece highlights historical examples, such as the "cobra effect," where well-intentioned policies (a reward per captured cobra) led to adverse outcomes (breeding of cobras for profit).